Six months ago we published an article about artificial intelligence in software testing, outlining in broad terms how it might be used and what advantages it might bring. The article concluded: “AI is still in its early stages of development and it will take some time for these technologies to mature.” Little did we know that just a month later ChatGPT would be released, prompting amazement at its capabilities and a mixture of excitement and trepidation about what this new technology might hold for the future.

What is ChatGPT?

ChatGPT is what is known as a large language model (LLM). I asked ChatGPT what that means:

A large language model is an artificial intelligence (AI) system that is trained on massive amounts of textual data, such as books, articles, and websites, in order to learn the patterns and structures of language. The goal of a large language model is to enable computers to understand and generate human-like language in a way that is contextually appropriate and semantically accurate.

In essence, it can both understand written language and write it. But what can it do with that capability? I asked:

- Understanding natural language
- Generating human-like responses
- Completing and predicting text
- Translating between languages
- Answering questions
- Recognising sentiment
- Summarising text

No mention of software testing there, but could we take those capabilities and use them to help us?

The Potential

With any system specification methodology (behaviour-driven development, or BDD, for example) we write down how we want the system to work and what outcomes it should produce. If we give those written specifications to ChatGPT, it is not beyond its capabilities to use natural language processing to interpret the requirements and then generate test cases from them. With its ability to summarise, it could also describe the steps and expected outcomes of each test case clearly and concisely.

ChatGPT could also be trained on existing test scenarios and scripts so that it reproduces a team's standard styles and formats. Furthermore, its ability to digest large amounts of information may help it identify edge cases and detect potential bugs before any hands-on testing has been done. Whilst this use of the system is not yet established, the potential for efficiencies and cost savings is clear to see.
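To make this more concrete, here is a minimal Python sketch of how a specification and some existing scenarios might be packaged into a request for ChatGPT. Rather than retraining the model, it relies on few-shot prompting: example scenarios in the team's house style are included in the prompt for the model to imitate. The function `build_prompt`, the example scenario and the prompt wording are all hypothetical, and the step of actually sending the prompt to ChatGPT is left out.

```python
from textwrap import dedent

# Hypothetical scenario in a team's house style. In practice these examples
# would be taken from an existing test suite.
EXAMPLE_SCENARIOS = [
    dedent("""\
        Scenario: Registered user logs in
          Given a registered user "alice"
          When she logs in with the correct password
          Then she sees her dashboard"""),
]


def build_prompt(specification, examples):
    """Assemble a few-shot prompt asking a language model for Gherkin scenarios.

    The instruction wording is an assumption, not a tested recipe.
    """
    parts = [
        "Write Gherkin scenarios (Given/When/Then) that test the specification below.",
        "Include edge cases, and follow the style of the examples exactly.",
        "",
        "Examples:",
        *examples,
        "",
        "Specification:",
        specification.strip(),
        "",
        "Scenarios:",
    ]
    return "\n".join(parts)


if __name__ == "__main__":
    spec = "Users must be locked out after three failed login attempts."
    # The resulting text could be pasted into ChatGPT or sent through an API.
    print(build_prompt(spec, EXAMPLE_SCENARIOS))
```

Keeping the examples separate from the specification means the same house-style examples can be reused for every new requirement.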

As well as assisting with the creation of test cases and documentation, ChatGPT could plausibly play a more active role in testing interactive automated customer relations systems, i.e. chatbots.
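One way to picture that role is paraphrase testing. A language model generates many differently worded versions of the same customer request, and a test harness checks that the chatbot still recognises what the customer wants. The Python sketch below assumes a toy keyword-based chatbot (the hypothetical `chatbot_intent` function). It uses hand-written paraphrases as stand-ins for model output and makes no call to ChatGPT.

```python
import re


def chatbot_intent(message):
    """Hypothetical chatbot under test: a toy keyword-based intent classifier."""
    text = message.lower()
    if re.search(r"\b(refund|money back)\b", text):
        return "refund"
    if re.search(r"\b(where|track|tracking)\b.*\b(order|parcel)\b", text):
        return "track_order"
    return "fallback"


# Paraphrases a language model might be asked to generate for each intent.
# These are hand-written stand-ins, not real ChatGPT output.
PARAPHRASES = {
    "refund": [
        "I want a refund please",
        "Can I get my money back?",
        "How do I return this and get reimbursed?",
    ],
    "track_order": [
        "Where is my order?",
        "Can you track my parcel?",
    ],
}


def run_paraphrase_tests(paraphrases):
    """Return (message, expected, actual) for every misclassified paraphrase."""
    failures = []
    for expected, messages in paraphrases.items():
        for message in messages:
            actual = chatbot_intent(message)
            if actual != expected:
                failures.append((message, expected, actual))
    return failures


if __name__ == "__main__":
    for message, expected, actual in run_paraphrase_tests(PARAPHRASES):
        print(f"FAIL: {message!r} expected {expected}, got {actual}")
```

With these inputs, `run_paraphrase_tests` reports a single failure: "How do I return this and get reimbursed?" falls through to the fallback intent. Varied, machine-generated phrasing could be useful for surfacing gaps like this.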

A Simple Example

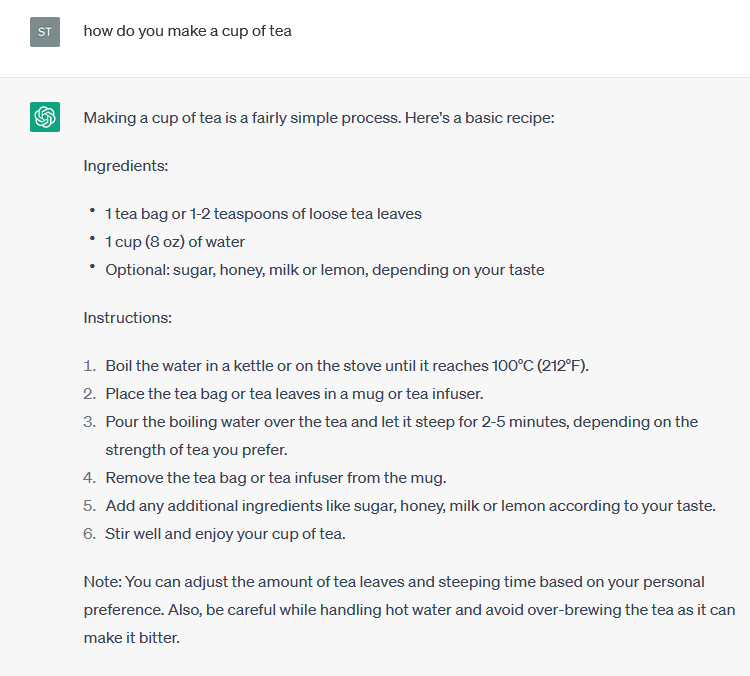

With behaviour-driven development we describe the behaviour of the system we are developing in informal, natural language, expressing how the product should work and what its outcomes should be. Typically we use a domain-specific language such as Gherkin to define test cases detailed enough for a developer to work with, yet written in language plain enough for non-technical users to read. Can ChatGPT help us with this?

As a trivial example, I asked ChatGPT how to make a cup of tea. This was its response.

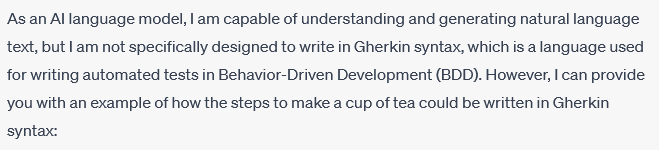

If I wanted to develop this as a software system, I might take this set of steps and write it up in Gherkin. So I asked ChatGPT to do that for me.

First, ChatGPT told me that writing Gherkin wasn’t something it was trained to do.

It then went on to produce this

Despite its reservations, it’s fair to say that this is a more than adequate Gherkin definition of making a cup of tea. For complete clarity, these were the first responses ChatGPT gave me: I didn’t have to train it or give it any supplementary information. A ChatGPT trained on Gherkin, taking written specifications and generating Gherkin text from them, is just one example of where this technology could lead.
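Whether a Gherkin definition is written by hand or generated by ChatGPT, a developer still has to bind each step to code before it becomes an executable test. The Python sketch below shows the basic mechanism: each Given/When/Then line is matched against a registered pattern and dispatched to a step function. The `step` decorator, `run_scenario` and the kettle scenario are illustrative inventions, not ChatGPT's output. A real project would more likely use an established BDD tool such as behave or pytest-bdd, whose APIs differ from this sketch.

```python
import re

# (compiled pattern, step function) pairs. This loosely mirrors how BDD tools
# such as behave or pytest-bdd bind Gherkin steps to code; it is not their API.
STEPS = []


def step(pattern):
    """Register the decorated function as the implementation of a step."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


def run_scenario(text, context):
    """Execute each Given/When/Then/And/But line using the registered steps."""
    for raw in text.strip().splitlines():
        line = raw.strip()
        keyword, _, body = line.partition(" ")
        if keyword not in ("Given", "When", "Then", "And", "But"):
            continue  # skip Feature:, Scenario: and other non-step lines
        for pattern, func in STEPS:
            match = pattern.fullmatch(body)
            if match:
                func(context, *match.groups())
                break
        else:
            raise LookupError(f"No step definition for: {line}")


@step(r"the kettle holds (\d+) ml of water")
def kettle_holds(context, ml):
    context["water_ml"] = int(ml)
    context["temp_c"] = 20  # assume the water starts at room temperature


@step(r"I boil the kettle")
def boil(context):
    assert context["water_ml"] > 0, "cannot boil an empty kettle"
    context["temp_c"] = 100


@step(r"the water is at (\d+) degrees")
def water_at(context, temp):
    assert context["temp_c"] == int(temp), context


SCENARIO = """
Scenario: Boiling water
  Given the kettle holds 500 ml of water
  When I boil the kettle
  Then the water is at 100 degrees
"""

if __name__ == "__main__":
    state = {}
    run_scenario(SCENARIO, state)
    print("Scenario passed:", state)
```

Run as a script, the scenario passes. Changing the final step to `Then the water is at 50 degrees` would make `water_at` fail with an `AssertionError`. A step with no matching pattern raises a `LookupError`.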

The Challenges

ChatGPT isn’t flawless. Indeed, its developers caution that it occasionally produces “inaccurate information”. There are plenty of examples online of ChatGPT misinterpreting the user’s intentions, getting things wrong and in some cases even making things up. So there’s no guarantee that any test script it generated would be correct.
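Anything ChatGPT generates therefore needs checking before it is trusted, and some of that checking can be automated cheaply. The Python sketch below is built around a hypothetical `check_scenarios` function. It flags generated Gherkin in which a scenario lacks a Given, When or Then step or puts them out of order. It is a structural lint only: whether the steps describe the right behaviour still needs a human reviewer. The sample text is a stand-in, not a real ChatGPT response.

```python
# Conventional step order within a scenario. Gherkin itself does not enforce
# this; it is a house-style rule assumed for this sketch.
STEP_ORDER = {"Given": 0, "When": 1, "Then": 2}


def check_scenarios(gherkin):
    """Return structural problems found in (possibly machine-generated) Gherkin.

    Only the shape is checked: every scenario needs Given, When and Then steps
    in that order. "And"/"But" lines are ignored, and Background sections are
    not considered, so scenarios relying on one will be flagged.
    """
    scenarios = []  # (title, [step keywords]) pairs
    for raw in gherkin.splitlines():
        line = raw.strip()
        if line.startswith(("Scenario:", "Scenario Outline:")):
            scenarios.append((line, []))
        elif scenarios:
            keyword = line.split(" ", 1)[0]
            if keyword in STEP_ORDER:
                scenarios[-1][1].append(keyword)

    problems = [] if scenarios else ["no scenarios found"]
    for title, keywords in scenarios:
        for keyword in STEP_ORDER:
            if keyword not in keywords:
                problems.append(f"{title}: no {keyword} step")
        ranks = [STEP_ORDER[k] for k in keywords]
        if ranks != sorted(ranks):
            problems.append(f"{title}: steps not in Given/When/Then order")
    return problems


# Stand-in for model output; this is not a real ChatGPT response.
GENERATED = """
Feature: Account lockout
  Scenario: Lock account after three failed attempts
    Given a registered user "alice"
    When she enters a wrong password three times
    Then her account is locked

  Scenario: Locked user cannot log in
    When she enters the correct password
    Then she is told her account is locked
"""

if __name__ == "__main__":
    for problem in check_scenarios(GENERATED):
        print("Review needed:", problem)
```

On the sample text, `check_scenarios` reports that the second scenario has no Given step.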

ChatGPT is a language model, so all inputs have to be text-based. Any specification that includes a graphical representation of the process, such as a flowchart, could pose problems.

There is no guarantee that ChatGPT would be able to help with every area of software testing; performance testing and security testing spring to mind. Some areas of software testing also rely on “domain knowledge”, for example of financial or medical systems, and ChatGPT doesn’t at this stage have that knowledge.

Above all, ChatGPT can’t judge the look and feel of a piece of software, or whether its interface is intuitive to use. It can’t investigate the cause of an error, nor judge how important that error is. A software tester brings years of experience, training and intuition to challenge the software’s functionality imaginatively, in ways that only a human can.

The Future

Over the past six months, the progress made in large language models has been nothing short of game-changing. The applications for this technology are only just beginning to emerge, and further developments may be just over the horizon. Once these tools are better understood, more refined and better integrated, they will surely change the way we work in software testing. Given what has gone before, it would be foolish to make any firm predictions: last October it was impossible to forecast what was coming in November. So, I thought I’d ask ChatGPT what it thinks…